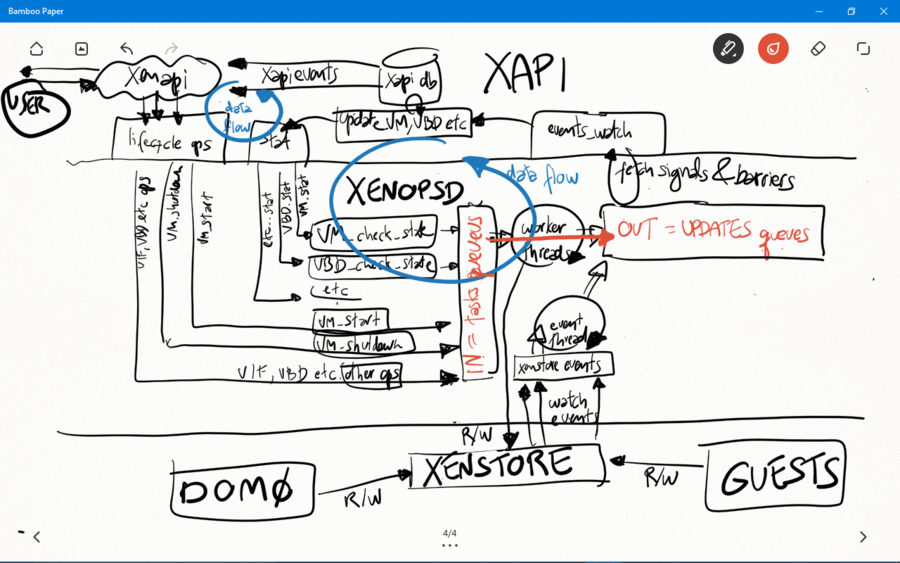

Xapi, xenopsd and xenstore use a number of different events to obtain indications that some state changed in dom0 or in the guests. The events are used as an efficient alternative to polling all these states periodically.

Given a choice between polling state and receiving events when that state changes, code should in general opt for receiving events. Polling adds bottlenecks in dom0 that limit the scalability of XenServer to many VMs and virtual devices.

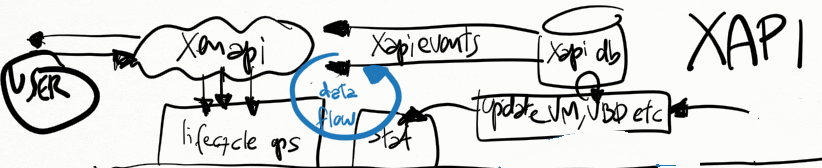

A xenapi user client, such as XenCenter, the xe-cli or a Python script, can register to receive events from XAPI for specific objects in the XAPI DB. XAPI will generate events for those registered clients whenever the corresponding XAPI DB object changes.

This small Python script shows how to register for events and run a simple event watch loop against XAPI:

    import XenAPI

    session = XenAPI.Session("http://xshost")
    session.login_with_password("username", "password")

    # register for events in the pool and VM objects
    session.xenapi.event.register(["VM", "pool"])

    while True:
        try:
            # block until a xapi event on a xapi DB object is available
            events = session.xenapi.event.next()
            for event in events:
                print("received event op=%s class=%s ref=%s" % (event['operation'], event['class'], event['ref']))
                if event['class'] == 'vm' and event['operation'] == 'mod':
                    # the snapshot holds the VM record as it was when the event was generated
                    vm = event['snapshot']
                    print("xapi-event on vm: vm_uuid=%s, name_label=%s, power_state=%s, current_operation=%s" % (vm['uuid'], vm['name_label'], vm['power_state'], vm['current_operations'].values()))
        except XenAPI.Failure as e:
            if len(e.details) > 0 and e.details[0] == 'EVENTS_LOST':
                # events were dropped: re-register to start receiving events again
                session.xenapi.event.unregister(["VM", "pool"])
                session.xenapi.event.register(["VM", "pool"])
            else:
                # any other failure is not handled here
                raise

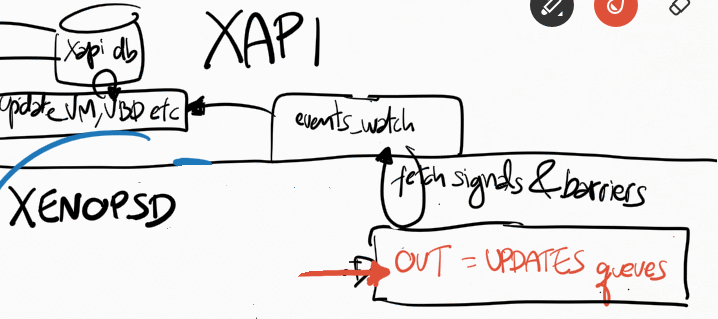

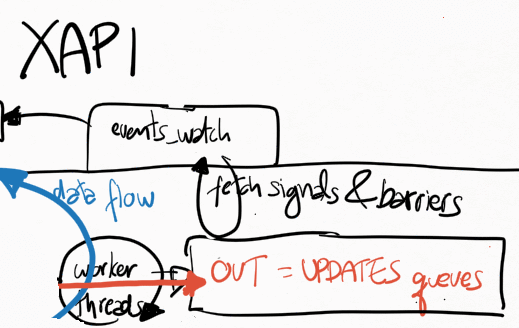

Xapi receives all events from xenopsd via the function xapi_xenops.events_watch(), which runs in its own independent thread. This single-threaded function is responsible for handling all of the signals sent by xenopsd. In some situations with lots of VMs and virtual devices such as VBDs, this loop may saturate a single dom0 vcpu. This slows down the handling of all xenopsd events and, in the worst case, may cause the xenopsd signals to accumulate without bound in the updates queue in xenopsd (see Figure 1).

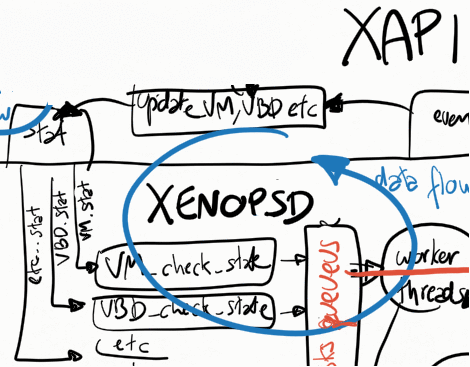

The function xapi_xenops.events_watch() calls xenops_client.UPDATES.get() to obtain a list of (barrier, barrier_events), and then processes each barrier_event, which can be one of the following events:

All the xapi_xenops.update_X() functions above will call Xenopsd_client.X.stat() functions to obtain the current state of X from xenopsd:

There are a couple of optimisations while processing the events in xapi_xenops.events_watch():

A xapi operation such as VM.start or VM.shutdown consists of many xenopsd sub-operations: for example VM.start, which forces xenopsd to change the VM power_state, followed by VM.stat, VBD.stat, VIF.stat etc., which force the xapi DB to catch up with the new xenopsd state of these objects. When xapi needs to execute such an operation and wait for the events indicating its completion, it sends a barrier to the xenopsd input queue. Xapi then blocks, and only continues execution of the barred operation when xenopsd returns the barrier. The barrier should only be returned when xenopsd has finished executing all the operations requested by xapi (such as the VBD.stat and VM.stat that update the state of the VM in the xapi database after a VM.start has been issued to xenopsd).
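The ordering guarantee of a barrier can be illustrated with a toy model. This is only a sketch under simplifying assumptions: the real xenopsd is written in OCaml and uses several worker threads, whereas the model below drains a single FIFO queue. The names `ToyXenopsd`, `submit_barrier` and `events_before_barrier` are hypothetical and exist only for this illustration.

```python
from collections import deque


class ToyXenopsd:
    """Toy model: a FIFO input queue and an updates (output) queue."""

    def __init__(self):
        self.input_queue = deque()
        self.updates = []

    def submit(self, operation):
        self.input_queue.append(("op", operation))

    def submit_barrier(self, barrier_id):
        self.input_queue.append(("barrier", barrier_id))

    def run(self):
        # Drain the input queue in order.
        while self.input_queue:
            kind, payload = self.input_queue.popleft()
            if kind == "op":
                # Executing an operation produces a signal for the caller.
                self.updates.append(("event", payload))
            else:
                # The barrier is returned only after all earlier operations.
                self.updates.append(("barrier", payload))


def events_before_barrier(updates, barrier_id):
    """Return the events the caller sees before barrier_id comes back."""
    seen = []
    for kind, payload in updates:
        if kind == "barrier" and payload == barrier_id:
            return seen
        if kind == "event":
            seen.append(payload)
    raise LookupError("barrier %r not returned yet" % barrier_id)


xenopsd = ToyXenopsd()
for op in ["VM.start", "VM.stat", "VBD.stat", "VIF.stat"]:
    xenopsd.submit(op)
xenopsd.submit_barrier(42)
xenopsd.submit("VM.stat")  # a later request, not covered by barrier 42
xenopsd.run()

done = events_before_barrier(xenopsd.updates, 42)
print(done)  # ['VM.start', 'VM.stat', 'VBD.stat', 'VIF.stat']
```

In this model, the caller that waits for barrier 42 knows that every operation submitted before the barrier has produced its update, while the later VM.stat is not part of the barred operation.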

A problem has recently been detected in the xapi_xenops.events_watch() function: when it needs to process many VM_check_state events, the processing of barriers associated with a VM.start may be pushed back. This delays xapi in reporting (via a xapi event) that the VM state in the xapi DB has reached the running power_state. This needs further debugging, and is probably one of the reasons in CA-87377 why, in some conditions, the xapi event reporting that the VM power_state is running (which causes the VM to go from yellow to green state in XenCenter) is returned long after the VM is already running.

Xenopsd has a few queues that are used by xapi to store commands to be executed (e.g. VBD.stat) and update events to be picked up by xapi. The main ones can easily be seen at runtime by running the following command in dom0:

    # xenops-cli diagnostics --queue=org.xen.xapi.xenops.classic
    {
      queues: [    # XENOPSD INPUT QUEUE
        ... stuff that still needs to be processed by xenopsd
        VM.stat
        VBD.stat
        VM.start
        VM.shutdown
        VIF.plug
        etc
      ]
      workers: [   # XENOPSD WORKER THREADS
        ... which stuff each worker thread is processing
      ]
      updates: {
        updates: [ # XENOPSD OUTPUT QUEUE
          ... signals from xenopsd that need to be picked up by xapi
          VM_check_state
          VBD_check_state
          etc
        ]
      }
      tasks: [     # XENOPSD TASKS
        ... state of each known task, before they are manually deleted after completion of the task
      ]
    }

Whenever xenopsd changes the state of a XenServer object such as a VBD or VM, or when it receives an event from xenstore indicating that the states of these objects have changed (perhaps because either a guest or the dom0 backend changed the state of a virtual device), it creates a signal for the corresponding object (VM_check_state, VBD_check_state etc) and sends it up to xapi. Xapi will then process this event in its xapi_xenops.events_watch() function.

These signals may need to wait a long time to be processed if the single-threaded xapi_xenops.events_watch() function is taking a long time to process previous signals in the UPDATES queue from xenopsd.

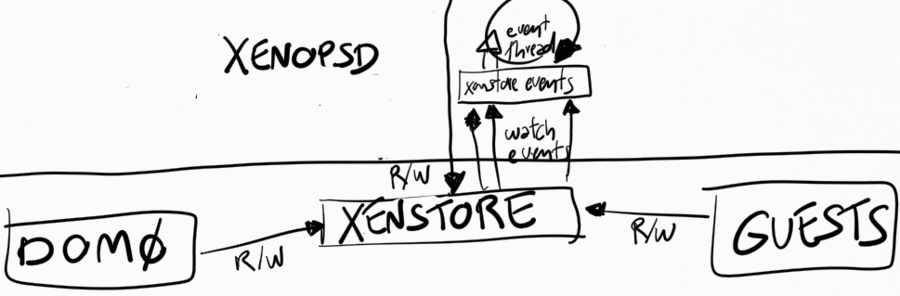

Xenopsd watches a number of keys in xenstore, both in dom0 and in each guest. Xenstore is responsible for sending watch events to xenopsd whenever the watched keys change state. Xenopsd uses a xenstore client library to make it easier to create a callback function that is called whenever xenstore sends these events.

Xenopsd sometimes also needs to complement these watch events by polling some values. An example is the @introduceDomain event in xenstore (handled in xenopsd/xc/xenstore_watch.ml), which indicates that a new VM has been created. Unfortunately this event does not indicate the domid of the VM, so xenopsd needs to query Xen (via libxc) for the domains now available in the host and compare them with the previous list of known domains in order to figure out the domid of the newly introduced domain.
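The comparison against the previous list of known domains amounts to a set difference. The following is a minimal sketch in Python rather than the OCaml used by xenopsd. `DomainTracker` and the injected `list_domids` callable are hypothetical stand-ins: the callable plays the role of the libxc query and is not a real binding.

```python
class DomainTracker:
    """Remembers the known domids and reports the ones that appear later."""

    def __init__(self, list_domids):
        # list_domids: hypothetical stand-in for querying Xen via libxc.
        self._list_domids = list_domids
        self._known = set(list_domids())

    def on_introduce_domain(self):
        # The event carries no domid, so list the domains again and
        # compare with the previously known set.
        current = set(self._list_domids())
        introduced = sorted(current - self._known)
        self._known = current
        return introduced


host_domains = [0, 3]  # illustrative domids present on the host
tracker = DomainTracker(lambda: list(host_domains))

host_domains.append(7)  # a new domain appears
new_domids = tracker.on_introduce_domain()
print(new_domids)  # [7]
```

The query runs only when the event arrives, so the cost of listing the domains is paid once per introduced domain rather than periodically.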

It is not good practice to poll xenstore for changes of values. This will add a large overhead to both xenstore and xenopsd, and decrease the scalability of XenServer in terms of number of VMs/host and virtual devices per VM. A much better approach is to rely on the watch events of xenstore to indicate when a specific value has changed in xenstore.

If a xenstore client has created watch events for a key, then xenstore will send events to this client whenever this key changes state.

Xenstore clients indicate to xenstore that some state changed by writing to a xenstore key. This may or may not cause xenstore to create watch events for the corresponding key, depending on whether other xenstore clients have watches on this key.
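The relationship between writes and watch events can be sketched with a toy in-memory store. This is an illustration only, not the xenstore client API: `ToyStore`, `watch` and `write` are hypothetical names, the keys and values are illustrative, and the model matches exact keys only, which is a simplification of how xenstore behaves.

```python
from collections import defaultdict


class ToyStore:
    """Toy key/value store that fires callbacks for watched keys."""

    def __init__(self):
        self._values = {}
        self._watches = defaultdict(list)

    def watch(self, key, callback):
        self._watches[key].append(callback)

    def write(self, key, value):
        """Store the value and return how many watch events were sent."""
        self._values[key] = value
        fired = 0
        for callback in self._watches.get(key, []):
            callback(key, value)
            fired += 1
        return fired


store = ToyStore()
received = []
vbd_state = "/local/domain/7/device/vbd/768/state"  # illustrative key
store.watch(vbd_state, lambda key, value: received.append((key, value)))

watched = store.write(vbd_state, "4")  # watched key: one event is sent
unwatched = store.write("/local/domain/7/memory/target", "1024")  # no watch
print(watched, unwatched, received)
```

The write to the unwatched key is stored but generates no event, which is why a client that is interested in a value registers a watch on it instead of reading it repeatedly.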